Video Chat and Rooms Setup

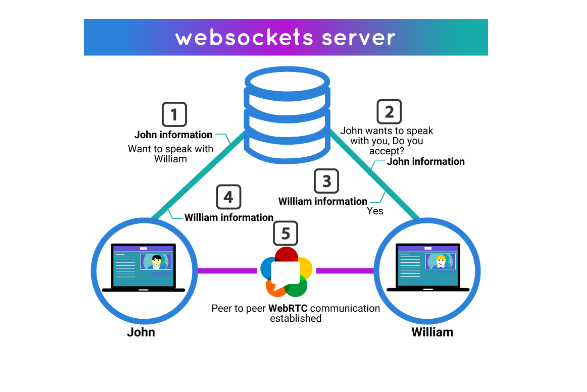

In this section, we go over the architecture of video chat using WebRTC, how we set up the video chat functionality, and how we added rooms.

A video chat application has two main components: a signaling server and a client. The client connects to the signaling server and tells it that it is looking for another video participant. Because the client needs to stay connected to the server for the duration of the call, it needs a persistent connection. In web applications, this is typically done using a WebSocket.

Suppose our client is the doctor, waiting for the patient. When another client (the patient) connects to the server, the server passes the patient's information to the doctor, and vice versa. If both parties accept, the video chat starts. The video itself is shared using WebRTC, the standard for real-time audio and video in web browsers.
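The pairing step described above (the server introducing a waiting client to the next one that connects) is not shown in our server code. Below is a minimal TypeScript sketch of the idea, assuming clients are identified by string IDs such as socket IDs; `Matchmaker`, `connect`, and `disconnect` are hypothetical names, not part of socket.io.

```typescript
// Hypothetical sketch: pair clients in arrival order, two at a time.
type ClientId = string;

interface Pairing {
  waiting: ClientId; // the client that was already waiting (e.g. the doctor)
  joined: ClientId;  // the client that just connected (e.g. the patient)
}

class Matchmaker {
  private waiting: ClientId | null = null;

  // Returns a pairing once a second client arrives; otherwise the client waits.
  connect(id: ClientId): Pairing | null {
    const partner = this.waiting;
    if (partner === null || partner === id) {
      this.waiting = id;
      return null;
    }
    this.waiting = null;
    return { waiting: partner, joined: id };
  }

  // A waiting client that disconnects must not be matched later.
  disconnect(id: ClientId): void {
    if (this.waiting === id) this.waiting = null;
  }
}

const mm = new Matchmaker();
const first = mm.connect("doctor");   // null: nobody else is waiting yet
const second = mm.connect("patient"); // pairs "doctor" with "patient"
```

On a real server, each side of the returned pairing would be sent the other side's ID so that offers can be addressed to it.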

Having understood this architecture, we initially decided to write our own signaling server. Writing it ourselves was completely free and let us test for as long as we wanted without running into the limits of a service's free tier. For a server that only handles two callers at a time, this is fairly straightforward.

We first created a Node.js server using Express and socket.io. Express is a JavaScript web framework that makes it easy to create an API. socket.io is a library that simplifies real-time communication over WebSockets by wrapping it in a far more elegant, easier-to-use event-based API. We installed both dependencies with npm in the server folder.

In the server's main JavaScript file, we wrote some initial boilerplate to set up Express and socket.io. Using socket.io, we listened for connecting clients on the "connection" event. Each client is handled by its own callback function, which handles three signaling events: "offer", "answer", and "ice-candidate".

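```javascript
const express = require('express');
const http = require('http');
const { Server } = require('socket.io');

const app = express();
const server = http.createServer(app);
// Allow the Vue dev server (served from a different origin) to connect.
const io = new Server(server, { cors: { origin: '*' } });

// Send to the given target, or to every other client when no target is given
// (sufficient for a single two-party call).
const relay = (socket, targetSocketId) =>
  targetSocketId ? socket.to(targetSocketId) : socket.broadcast;

io.on('connection', (socket) => {
  // Forward the caller's offer along with its socket ID so the callee can answer.
  socket.on('offer', (offer, targetSocketId) => {
    relay(socket, targetSocketId).emit('offer', offer, socket.id);
  });

  // Send the callee's answer back to the original caller.
  socket.on('answer', (answer, targetSocketId) => {
    relay(socket, targetSocketId).emit('answer', answer);
  });

  // Pass each discovered network route on to the other peer.
  socket.on('ice-candidate', (candidate, targetSocketId) => {
    relay(socket, targetSocketId).emit('ice-candidate', candidate);
  });
});

server.listen(3000);
```

In the context of video chat, an "offer" is the message that initiates the connection process. It contains Session Description Protocol (SDP) data describing the sender's media (for example, which audio and video codecs it supports) and connection parameters. When the server receives an offer from a client, it emits an "offer" event to the target client, passing along the offer data and the sender's socket ID so that the target knows where to send its answer.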
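To make "SDP data" more concrete, here is a minimal TypeScript sketch that lists the media sections and codecs in an SDP string, such as the `sdp` field of the offer object a browser creates. `summarizeSdp` is a hypothetical helper, and the sample offer is heavily trimmed; real browser offers contain many more lines.

```typescript
// Minimal sketch: list the media sections and codecs an SDP offer advertises.
interface MediaSection {
  kind: string;     // "audio", "video", or "application"
  codecs: string[]; // encodings from a=rtpmap lines, e.g. "opus/48000/2"
}

function summarizeSdp(sdp: string): MediaSection[] {
  const sections: MediaSection[] = [];
  // SDP lines are separated by CRLF; tolerate bare LF as well.
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith("m=")) {
      // m=<media> <port> <proto> <fmt> ... starts a new media section.
      sections.push({ kind: line.slice(2).split(" ")[0], codecs: [] });
    } else if (line.startsWith("a=rtpmap:") && sections.length > 0) {
      // a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
      const encoding = line.split(" ")[1];
      if (encoding) sections[sections.length - 1].codecs.push(encoding);
    }
  }
  return sections;
}

// Trimmed example offer (session-level lines, then one audio and one video section).
const exampleOffer = [
  "v=0",
  "o=- 4611731400430051336 2 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=rtpmap:111 opus/48000/2",
  "m=video 9 UDP/TLS/RTP/SAVPF 96",
  "a=rtpmap:96 VP8/90000",
].join("\r\n");

console.log(summarizeSdp(exampleOffer));
```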

Similarly, an "answer" is the response to an offer and also contains SDP data. The client that received the offer sends it to accept the connection, and the server forwards this "answer" message to the original sender of the offer, using the target socket ID.
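The forwarding rule behind these handlers can be modeled without any networking. The TypeScript sketch below is hypothetical (`TwoPartyRelay` and `Delivery` are illustrative names, not socket.io APIs). Because the client code in this section emits "offer" and "ice-candidate" without a target ID, it assumes that a missing target means "every other connected peer", which is only correct while at most two peers are connected.

```typescript
// Hypothetical, transport-free model of the signaling server's forwarding rule.
type SignalType = "offer" | "answer" | "ice-candidate";

interface Delivery {
  to: string;
  from: string;
  event: SignalType;
  payload: unknown;
}

class TwoPartyRelay {
  private peers = new Set<string>();

  connect(id: string): void {
    this.peers.add(id);
  }

  disconnect(id: string): void {
    this.peers.delete(id);
  }

  forward(from: string, event: SignalType, payload: unknown, target?: string): Delivery[] {
    if (target !== undefined) {
      // Like socket.to(target).emit(...): only a connected target other than the sender receives it.
      return this.peers.has(target) && target !== from ? [{ to: target, from, event, payload }] : [];
    }
    // Like socket.broadcast.emit(...): every other connected peer receives it.
    return Array.from(this.peers)
      .filter((id) => id !== from)
      .map((id) => ({ to: id, from, event, payload }));
  }
}

const relay = new TwoPartyRelay();
relay.connect("doctor");
relay.connect("patient");
relay.forward("doctor", "offer", { type: "offer", sdp: "..." });             // delivered to "patient"
relay.forward("patient", "answer", { type: "answer", sdp: "..." }, "doctor"); // delivered to "doctor"
```

With a third client connected, the untargeted branch would leak offers into unrelated calls, which is one reason rooms need real bookkeeping.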

Finally, "ice-candidate" messages are part of ICE (Interactive Connectivity Establishment), the framework WebRTC uses to find the best path for streaming media between peers. Each ICE candidate represents a potential network route (an address, port, and transport protocol) for the peer-to-peer connection. Whenever a client discovers a candidate, it sends it to the other client so that both sides can test connectivity along that route. Handling these three events was enough to build a signaling server for two participants.
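To see what one "potential network route" looks like, the TypeScript sketch below splits an ICE candidate string (such as the `candidate` field of the objects sent in "ice-candidate" messages) into its fields, following the candidate-attribute grammar in RFC 8839. `parseCandidate` is a hypothetical helper, and the sample candidate uses a documentation IP address.

```typescript
// Minimal sketch: split an ICE candidate line into the fields describing one route.
interface ParsedCandidate {
  foundation: string;
  component: number; // 1 = RTP; 2 = RTCP when it is not multiplexed with RTP
  transport: string; // "udp" or "tcp"
  priority: number;  // higher values are tried first
  address: string;
  port: number;
  type: string;      // "host", "srflx", "prflx", or "relay"
}

function parseCandidate(line: string): ParsedCandidate | null {
  // Accept both "a=candidate:..." (inside SDP) and "candidate:..." (from the browser API).
  const parts = line.replace(/^a=/, "").replace(/^candidate:/, "").trim().split(/\s+/);
  if (parts.length < 8 || parts[6] !== "typ") return null;
  const [foundation, component, transport, priority, address, port, , type] = parts;
  return {
    foundation,
    component: Number(component),
    transport: transport.toLowerCase(),
    priority: Number(priority),
    address,
    port: Number(port),
    type,
  };
}

// A server-reflexive candidate: the public address a STUN server observed for this peer.
const route = parseCandidate(
  "candidate:842163049 1 udp 1677729535 203.0.113.5 54321 typ srflx raddr 192.168.1.2 rport 54321 generation 0"
);
console.log(route);
```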

After writing the server, we wrote a Vue view that connects to the server and starts a video call once another client is connected. Our template was fairly simple: two video elements (one for the user's own webcam and one for the other party's video) and a button to start the call.

```vue
<template>
  <div>
    <!-- The ref attributes bind these elements to the refs in the setup script. -->
    <video id="localVideo" ref="localVideo" autoplay playsinline muted></video>
    <video id="remoteVideo" ref="remoteVideo" autoplay playsinline></video>
    <button @click="startCall">Start Call</button>
  </div>
</template>
```

In the view's setup script, we used the socket.io client library to connect to the signaling server and handle the "offer", "answer", and "ice-candidate" events. The offer is emitted only when the user clicks the Start Call button, so the client does not try to reach the other party until then. Incoming ICE candidates are added to the peer connection as they arrive, the "answer" event completes the offer/answer exchange on the caller's side, and the other party's video is attached in the `ontrack` handler once their media arrives.

```javascript
import { ref, onMounted } from 'vue';
import { io } from 'socket.io-client';

const socket = io('http://localhost:3000');
const localConnection = new RTCPeerConnection();

// Bound to the <video ref="..."> elements in the template.
const localVideo = ref(null);
const remoteVideo = ref(null);

onMounted(async () => {
  // Capture webcam and microphone, show them locally, and add them to the call.
  const stream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  localVideo.value.srcObject = stream;
  stream.getTracks().forEach(track => localConnection.addTrack(track, stream));
});

// Send each local ICE candidate to the other party via the signaling server.
localConnection.onicecandidate = event => {
  if (event.candidate) {
    socket.emit('ice-candidate', event.candidate);
  }
};

// Show the other party's media once it arrives.
localConnection.ontrack = event => {
  remoteVideo.value.srcObject = event.streams[0];
};

// Callee: accept the offer and reply to its sender with an answer.
socket.on('offer', async (offer, fromSocketId) => {
  await localConnection.setRemoteDescription(new RTCSessionDescription(offer));
  const answer = await localConnection.createAnswer();
  await localConnection.setLocalDescription(answer);
  socket.emit('answer', answer, fromSocketId);
});

// Caller: apply the callee's answer.
socket.on('answer', async answer => {
  await localConnection.setRemoteDescription(new RTCSessionDescription(answer));
});

// Both sides: register each route the other party discovers.
socket.on('ice-candidate', candidate => {
  localConnection.addIceCandidate(new RTCIceCandidate(candidate));
});

// Caller: create and send the offer when "Start Call" is clicked.
const startCall = async () => {
  const offer = await localConnection.createOffer();
  await localConnection.setLocalDescription(offer);
  socket.emit('offer', offer);
};
```

Our initial WebSocket signaling server worked well as long as we only needed a maximum of two parties (i.e., one call) at a given time. The natural next step was to add support for rooms, so that multiple doctors and patients could use the service at once and a doctor could send a room code to the patient ahead of time. After estimating how much code we would need to add to the server for this, we decided to scrap our custom server and use Agora.io instead. Its free tier (up to 10,000 minutes of video per month) was plenty for our testing, and its SDK made adding rooms straightforward.
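For comparison, rooms on a custom server would need at least bookkeeping along these lines, which Agora's channels take care of for us. This is a hypothetical TypeScript sketch (`RoomRegistry` and its methods are illustrative names, not Agora's API), assuming each room holds exactly one doctor and at most one patient.

```typescript
// Hypothetical sketch: each room code maps to at most one doctor and one patient.
type Role = "doctor" | "patient";

class RoomRegistry {
  private rooms = new Map<string, Map<Role, string>>();

  // The doctor creates the room and can share its code ahead of time.
  create(code: string, doctorId: string): void {
    if (this.rooms.has(code)) throw new Error(`room ${code} already exists`);
    this.rooms.set(code, new Map<Role, string>([["doctor", doctorId]]));
  }

  // Returns false if the room does not exist or the patient seat is taken.
  join(code: string, patientId: string): boolean {
    const room = this.rooms.get(code);
    if (!room || room.has("patient")) return false;
    room.set("patient", patientId);
    return true;
  }

  // Signaling messages would only be relayed to the other member of the same room.
  peerOf(code: string, id: string): string | undefined {
    const room = this.rooms.get(code);
    if (!room) return undefined;
    const members = Array.from(room.values());
    if (members.indexOf(id) === -1) return undefined;
    return members.find((member) => member !== id);
  }

  // Remove a participant; delete the room once it is empty.
  leave(code: string, id: string): void {
    const room = this.rooms.get(code);
    if (!room) return;
    for (const [role, member] of Array.from(room.entries())) {
      if (member === id) room.delete(role);
    }
    if (room.size === 0) this.rooms.delete(code);
  }
}
```

Keeping one map per room means two simultaneous appointments never see each other's offers or ICE candidates.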

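```typescript
import { ref } from "vue";
import AgoraRTC from "agora-rtc-sdk-ng";
import type { IAgoraRTCRemoteUser } from "agora-rtc-sdk-ng";

// Client setup (assumed options): communication ("rtc") mode with the VP8 codec.
const client = AgoraRTC.createClient({ mode: "rtc", codec: "vp8" });

// Whether we are currently receiving the remote party's video and audio.
const isVideoSubed = ref(false);
const isAudioSubed = ref(false);

client.on("user-published", onPublished);
client.on("user-unpublished", onUnPublished);

// A remote user started sending a track: subscribe to it and play it.
async function onPublished(user: IAgoraRTCRemoteUser, mediaType: "video" | "audio") {
  await client.subscribe(user, mediaType);
  if (mediaType === "video") {
    const remoteVideoTrack = user.videoTrack;
    if (remoteVideoTrack) {
      // Renders into the element with id="remote-video".
      remoteVideoTrack.play("remote-video");
      isVideoSubed.value = true;
    }
  }
  if (mediaType === "audio") {
    const remoteAudioTrack = user.audioTrack;
    if (remoteAudioTrack) {
      remoteAudioTrack.play();
      isAudioSubed.value = true;
    }
  }
}

// A remote user stopped sending a track: unsubscribe and update the UI state.
async function onUnPublished(user: IAgoraRTCRemoteUser, mediaType: "video" | "audio") {
  await client.unsubscribe(user, mediaType);
  if (mediaType === "video") {
    isVideoSubed.value = false;
  }
  if (mediaType === "audio") {
    isAudioSubed.value = false;
  }
}
```

We now need only two event handlers in the setup script: one for when a remote user publishes an audio or video track (we subscribe to it and play it) and one for when they unpublish it. When a user decides to host a meeting, a six-character room code of letters and numbers is generated, and they join the Agora "channel" with that name. When the other party joins that channel and publishes their tracks, `onPublished` is called and plays their video. Similarly, when a party leaves the room, `onUnPublished` is called and we unsubscribe from their stream.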
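A room code like the one described above can be generated as in the TypeScript sketch below. It is hypothetical: the alphabet (uppercase letters plus digits) is an assumption, and it uses Node's `randomInt` from `node:crypto`; in the browser, the Web Crypto API's `crypto.getRandomValues` would play the same role. The resulting string would be used as the Agora channel name.

```typescript
import { randomInt } from "node:crypto";

// Assumed alphabet: A-Z and 0-9 (36 symbols).
const ROOM_CODE_ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";

// Hypothetical helper: each character is drawn uniformly from the alphabet.
function generateRoomCode(length = 6): string {
  let code = "";
  for (let i = 0; i < length; i++) {
    // randomInt(max) returns a cryptographically secure integer in [0, max).
    code += ROOM_CODE_ALPHABET[randomInt(ROOM_CODE_ALPHABET.length)];
  }
  return code;
}

console.log(generateRoomCode()); // e.g. a code such as "7QK2ZD"; varies per run
```

With 36 symbols and six positions there are 36^6 = 2,176,782,336 possible codes, so accidental collisions between concurrent rooms are unlikely, though a server could still check for an existing room before handing a code out.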

To summarize, adding video chat functionality requires a signaling server and a client that can connect to it. We started our journey into video chat by creating a WebSocket server but ended up pivoting to Agora because it was significantly easier to use. Using Agora's channel feature, we were easily able to add rooms to our telehealth app. This is vital because each patient needs a private video chat room to meet with their doctor.

https://webrtc.ventures/wp-content/uploads/2017/10/websocket-server.png

https://api-ref.agora.io/en/voice-sdk/web/4.x/index.html

Arvind Kasiliya